Web scraping relies on the HTML structure of a page, so a scraper can never be completely stable: when the markup changes, the scraper may break. Keep this in mind when reading this article. Because JavaScript is excellent at manipulating the DOM (Document Object Model) inside a web browser, data extraction scripts written in Node.js can be extremely versatile, so this tutorial focuses on web scraping with JavaScript and Node.js. Two tools come up again and again. Cheerio is a minimalist, fast library that can be combined with other modules into an end-to-end script, for example one that saves its output as CSV. Puppeteer is slower, but because it drives a real browser it works with sites built with Angular or React, can click through pages, and can take screenshots and generate PDFs.

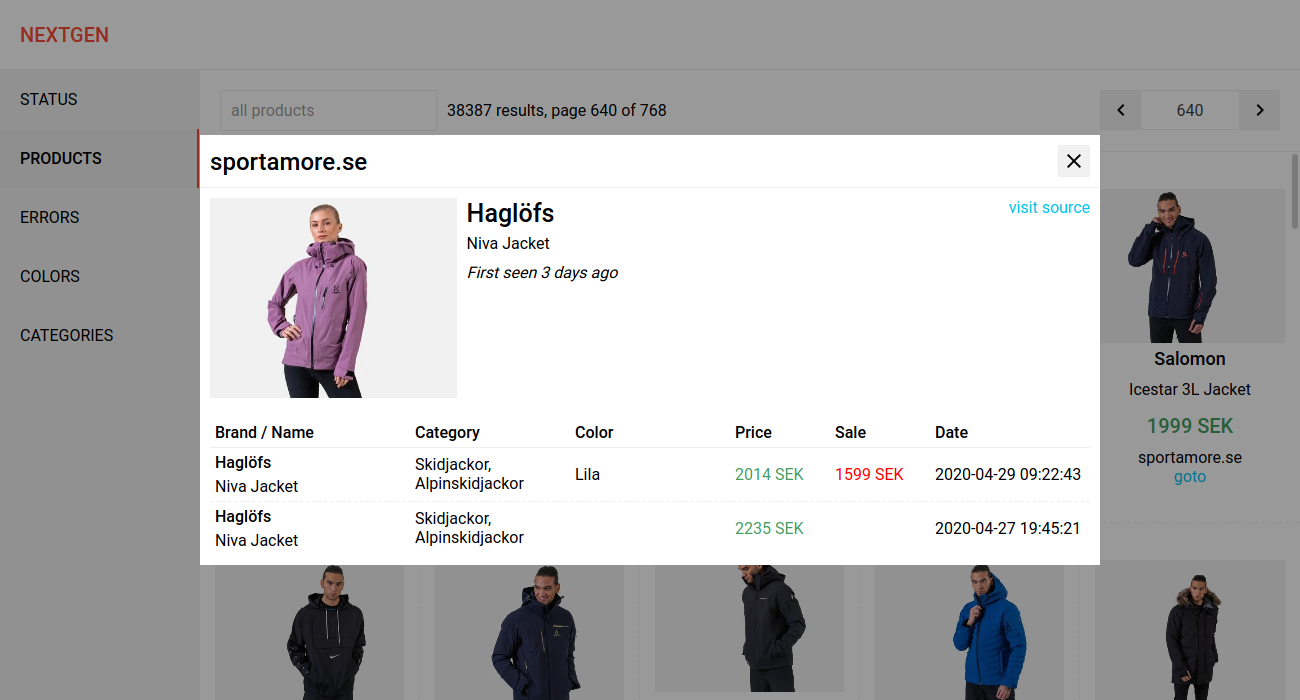

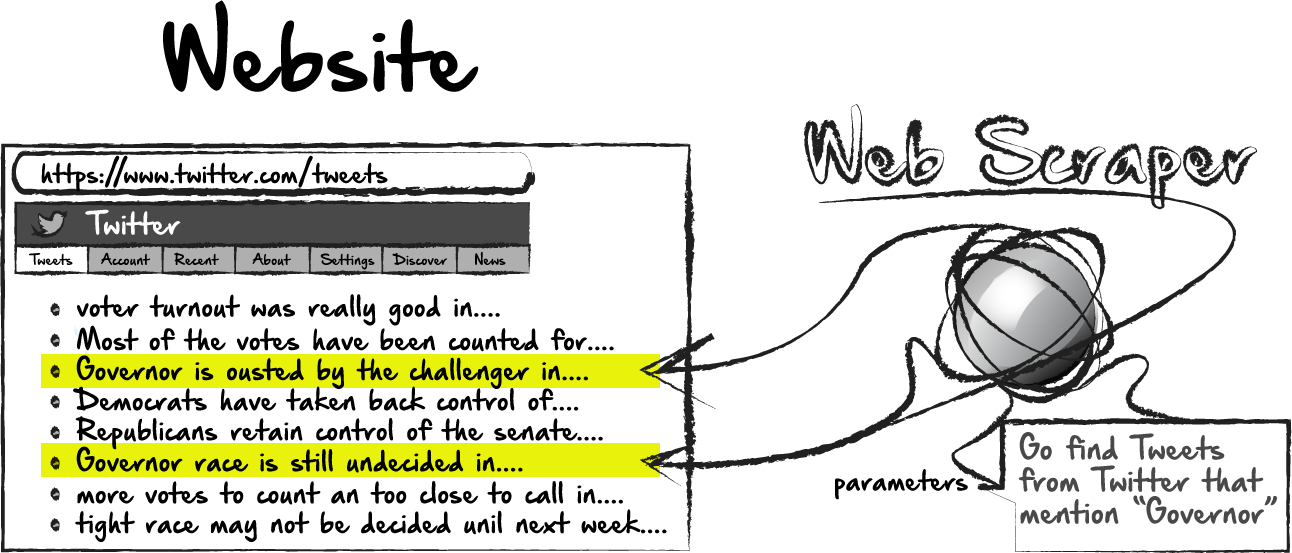

In a perfect world, every website provides free access to data with an easy-to-use API… but the world is far from perfect. However, web scraping techniques make it possible to extract data from websites by brute force. The following lesson examines two different types of web scrapers and implements them with NodeJS and Firebase Cloud Functions.


Initial Setup

Let’s start by initializing Firebase Cloud Functions with JavaScript.

Strategy A - Basic HTTP Request

The first strategy makes an HTTP request to a URL and expects an HTML document string as the response. Retrieving the HTML is easy, but NodeJS has no browser DOM APIs, so we need a tool like cheerio to parse the document and find the necessary meta tags.
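The fetch step can be sketched as below. This is a minimal sketch rather than the lesson's own code: it assumes Node 18 or later, where `fetch` is a global with the same Fetch API shape that node-fetch provides. The `fetchHtml` name, the user-agent string, and the injectable `fetchImpl` parameter are all hypothetical.

```javascript
// Fetch a page and return its HTML as a plain string.
// ASSUMPTION: Node 18+, where fetch() is global; node-fetch exposes the same API.
// fetchImpl is a hypothetical parameter so the function can run against a stub.
async function fetchHtml(url, fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(url, {
    // Some sites serve different (or no) markup to unknown clients.
    headers: { 'User-Agent': 'Mozilla/5.0 (compatible; link-preview-bot)' },
  });
  if (!res.ok) {
    throw new Error(`Request to ${url} failed with status ${res.status}`);
  }
  // No DOM here: this is only text until a parser such as cheerio loads it.
  return res.text();
}
```

Nothing in this step runs the page's scripts, which is exactly why it is fast and why it misses client-rendered content.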

The advantage 👍 of this approach is that it is fast and simple, but the disadvantage 👎 is that it will not execute JavaScript and/or wait for dynamically rendered content on the client.

Link Preview Function

💡 It is not possible to generate link previews entirely from the frontend, because the browser's same-origin policy (CORS) blocks a page from reading another site's HTML unless that site explicitly allows it.

An excellent use case for this strategy is a link preview service that shows the name, description, and image of a third-party website when a URL is posted into an app. For example, when you post a link into an app like Twitter, Facebook, or Slack, it renders a nice-looking preview.
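Before any HTML is fetched, the service has to find the URLs in the posted text. The sketch below is a hypothetical, dependency-free stand-in for the get-urls package; the `findUrls` name and its regular expression are assumptions. It only trims trailing punctuation, validates each match with the WHATWG `URL` constructor, and deduplicates with a `Set`, whereas get-urls also normalizes URLs more thoroughly.

```javascript
// Hypothetical stand-in for get-urls: find http(s) URLs in free text.
// Much simpler than the real package: trims trailing punctuation and dedupes.
function findUrls(text) {
  const candidates = text.match(/https?:\/\/[^\s<>"']+/g) || [];
  const urls = new Set();
  for (const raw of candidates) {
    // Drop punctuation that usually ends a sentence rather than the URL.
    const trimmed = raw.replace(/[.,!?;:)]+$/, '');
    try {
      urls.add(new URL(trimmed).href); // URL() validates and normalizes
    } catch {
      // Not a parseable URL; skip it.
    }
  }
  return urls;
}
```

Because `new URL()` normalizes `https://example.com` to `https://example.com/`, two mentions that differ only by that trailing slash collapse into one entry.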

Link previews are made possible by scraping the meta tags from the <head> of an HTML page. The code requests a URL, then looks for Twitter and OpenGraph meta tags in the response body. Several supporting libraries make the code simpler and more reliable.

- cheerio is a NodeJS implementation of jQuery.

- node-fetch is a NodeJS implementation of the browser Fetch API.

- get-urls is a utility for extracting URLs from text.
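The meta tag lookup described above might look like the following sketch. It assumes the caller has already parsed the HTML with `const $ = cheerio.load(html)`; `getMetatag` and `extractPreview` are hypothetical helper names, and the fallback order (standard, then OpenGraph, then Twitter tags) is one reasonable choice rather than a rule.

```javascript
// Look up one preview field across tag conventions.
// $ is a cheerio-loaded document; .attr() returns undefined when nothing matches.
function getMetatag($, name) {
  return (
    $(`meta[name="${name}"]`).attr('content') ||         // <meta name="description">
    $(`meta[property="og:${name}"]`).attr('content') ||  // OpenGraph
    $(`meta[name="twitter:${name}"]`).attr('content')    // Twitter card
  );
}

// Hypothetical helper: collect the fields a link preview needs from <head>.
function extractPreview($, url) {
  return {
    url,
    // .text() returns '' when there is no <title>, so fall back to meta tags.
    title: $('title').first().text() || getMetatag($, 'title'),
    description: getMetatag($, 'description'),
    image: getMetatag($, 'image'),
    author: getMetatag($, 'author'),
  };
}
```

Keeping the cheerio call outside the helpers means `extractPreview(cheerio.load(html), url)` can be paired with whatever fetch step you use.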

Let’s start by building a

HTTP Function

You can use the scraper in an HTTP Cloud Function.
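A minimal sketch of the HTTP wrapper, assuming the client POSTs JSON shaped like `{ "text": "..." }`. That request shape and the `makeScraperHandler` name are hypothetical. The handler takes Express-style `req`/`res` objects, which is what Firebase HTTP functions receive, and the Firebase wiring appears only as a comment so the sketch runs without the SDK.

```javascript
// Wrap any async scrape(text) function in an Express-style (req, res) handler.
// Hypothetical Firebase wiring (not executed here):
//   const functions = require('firebase-functions');
//   exports.scraper = functions.https.onRequest(makeScraperHandler(scrapeMetatags));
function makeScraperHandler(scrape) {
  return async (req, res) => {
    let body;
    try {
      // JSON sent as application/json arrives parsed; a text/plain body is a string.
      body = typeof req.body === 'string' ? JSON.parse(req.body) : req.body;
    } catch {
      body = undefined; // malformed JSON is treated like a missing body
    }
    if (!body || typeof body.text !== 'string') {
      res.status(400).send({ error: 'Expected a JSON body with a "text" field' });
      return;
    }
    try {
      res.status(200).send(await scrape(body.text));
    } catch (err) {
      res.status(500).send({ error: err.message });
    }
  };
}
```

Injecting `scrape` keeps the HTTP concerns (parsing, status codes) separate from the scraping logic, so each can be tested on its own.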

At this point, you should receive a response by opening http://localhost:5000/YOUR-PROJECT/REGION/scraper


Strategy B - Puppeteer for Full Browser Rendering


What if you want to scrape a single-page JavaScript app built with Angular or React? Or maybe you want to click buttons or log into an account before scraping? These tasks require a fully emulated browser environment that can execute JavaScript and handle events.

Puppeteer is a Node library built on top of headless Chrome, which allows you to run the Chrome browser on the server. In other words, you can fully interact with a website before extracting the data you need.
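Rendering a page with Puppeteer typically follows the same steps: launch a browser, open a page, navigate, read the DOM, close. The sketch below assumes the `puppeteer` package is installed; `renderPage` is a hypothetical name, and the `launch` parameter exists only so the function can be exercised with a stub browser.

```javascript
// Render a page in headless Chrome and return the HTML after client-side JS ran.
// ASSUMPTION: the puppeteer package is installed; it is required lazily so a
// stub `launch` can be passed instead.
async function renderPage(url, launch = (opts) => require('puppeteer').launch(opts)) {
  const browser = await launch({ headless: true });
  try {
    const page = await browser.newPage();
    // networkidle2: navigation counts as finished once there have been no more
    // than 2 network connections for 500 ms, a rough "rendering settled" signal.
    await page.goto(url, { waitUntil: 'networkidle2' });
    return await page.content(); // serialized DOM, including JS-rendered markup
  } finally {
    await browser.close(); // always release the Chrome process
  }
}
```

The `try`/`finally` matters on a server: a failed navigation would otherwise leave a Chrome process running.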

Instagram Scraper


Instagram on the web uses React, which means we won't see any dynamic content until the page is fully loaded. Puppeteer is available in the Cloud Functions runtime, allowing you to spin up a Chrome browser on your server. It will render JavaScript and handle events just like the browser you're using right now.

First, the function logs into a real Instagram account. The page.type method finds the corresponding DOM element and types characters into it. Once logged in, we navigate to a specific username, wait for the img tags to render on the screen, then scrape the src attribute from them.
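The login-then-scrape flow described above could be sketched as follows. It takes an already opened Puppeteer `page` (for example from `browser.newPage()`). The login URL, every CSS selector, the `loginUser`/`loginPassword`/`targetUsername` option names, and the choice to wait for a navigation after submitting are all assumptions that Instagram's current markup may not match.

```javascript
// Log in, open a profile, wait for <img> tags, and collect their src values.
// ASSUMPTIONS: URL, selectors, option names, and the post-login wait strategy
// are illustrative and may break whenever Instagram changes its markup.
async function scrapeProfileImages(page, { loginUser, loginPassword, targetUsername }) {
  await page.goto('https://www.instagram.com/accounts/login/', { waitUntil: 'networkidle2' });
  await page.waitForSelector('input[name="username"]');

  // page.type focuses the matching element and types the text key by key.
  await page.type('input[name="username"]', loginUser);
  await page.type('input[name="password"]', loginPassword);

  // Start waiting for navigation before clicking so the event is not missed.
  await Promise.all([
    page.waitForNavigation({ waitUntil: 'networkidle2' }),
    page.click('button[type="submit"]'),
  ]);

  await page.goto(`https://www.instagram.com/${targetUsername}/`, { waitUntil: 'networkidle2' });
  await page.waitForSelector('img', { visible: true });

  // $$eval runs the callback inside the page with every matching element.
  return page.$$eval('img', (imgs) => imgs.map((img) => img.src));
}
```

In practice the credentials would come from configuration such as environment variables rather than being hard-coded.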